The challenge

Some time ago, a major private television station in Europe experienced an incident that outwitted all of its contingency arrangements. The station's headquarters consist of several buildings spread across a large campus. The main playout and the disaster recovery playout were in facilities at opposite sides of the lot, the former on a first floor and the latter in a bunker-type basement with its own independent power supply, consisting of feed points from two different electricity companies and a diesel generator. Creating the disaster recovery site had been expensive, but everybody saw it as a good investment, since a premium television station cannot afford interruptions to its emission that damage its prestige, its audience and its income. Yet in spite of all the effort devoted to its construction, the disaster recovery site proved useless when it was needed.

The disaster

One day, an episode of extremely heavy rain clogged the drainage system and flooded the campus with a layer of water that in some areas reached nearly half a foot. When, after some hours under water, the power supply of the main playout failed, the playout engineers knew the emission would go to black. There was no way to switch to the superb disaster recovery playout because, being in a basement, it had been the very first part of the campus to break down. The station was not off air for long, because it switched to a regional branch while power to the main building was hastily restored, but it was a night to remember for everybody involved.

After the post-mortem of that fateful night was written, many options were proposed: look for a lot on top of a hill, improve the drainage of the campus until it could survive epic deluges, waterproof the basement until it became more hermetic than a submarine, or build a two-storey bunker with the disaster recovery on the second floor. However, these brute-force approaches were very expensive and not really satisfactory. Someone came up with the idea of locating the disaster recovery in the cloud, and after some consideration it was decided to explore this possibility in earnest. They were traditional broadcast engineers who had always seen the cloud as something abstract and remote, but who now realised that this remote and abstract place was the ideal location for disaster recovery playout, sheltered from any danger. After a thorough selection process, VBox Disaster Recovery (VBox DR) from Vector 3 was chosen to place the emission in the cloud, far beyond physical danger yet immediately accessible from any computer, tablet or cell phone.

The solution

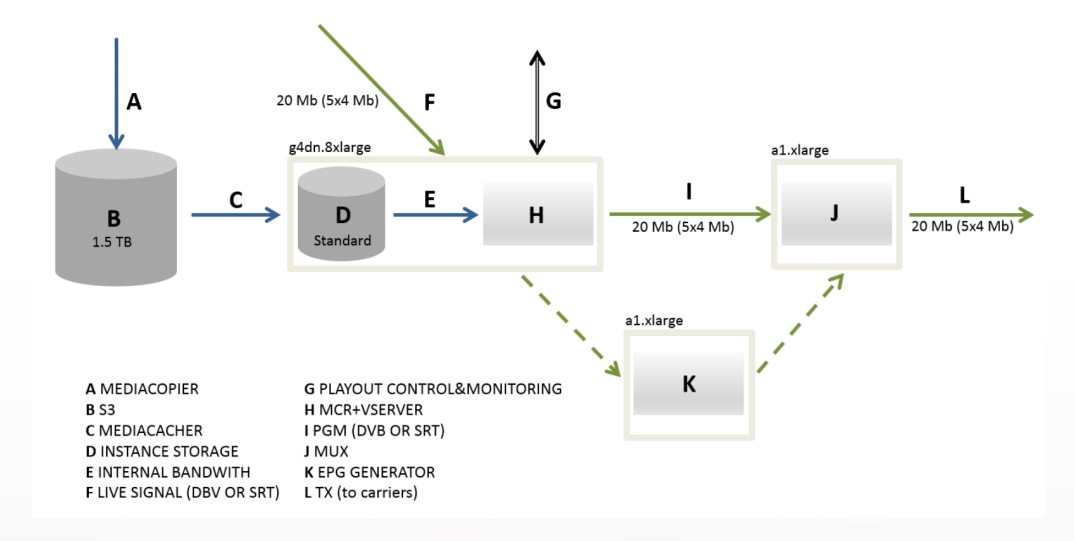

The proposed, approved and implemented solution consisted of a VBox DR system running on instances in the AWS cloud already owned by the customer. The customer was already using the cloud as a repository and long-term archive for its video files, and the new disaster recovery playout was installed on instances belonging to this same AWS cloud. This has two key advantages. First, the files do not need to leave the cloud environment under the customer's control, which avoids legal implications with third parties (e.g. production companies and rights owners). Second, because the video files were already stored there, Vector 3’s Mediamanager can fetch them from the S3 storage to the playout instances using the playlists. For last-minute video files, a special procedure was established that copies them from the on-premise NAS storage to a dedicated folder, following the same path that is used for the playlists of the coming days. The playlist of the current day is downloaded each time it changes, and in any case at least once an hour, to ensure it is synchronised with the one on air in the main third-party system. In this way, the disaster recovery system can mirror the main emission at any time.
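
The refresh rule for the current day's playlist (download on every change, and at least once an hour regardless) can be illustrated with a minimal Python sketch. This is not Vector 3's implementation: the names `PlaylistSyncState`, `needs_download` and `record_download` are hypothetical, and the sketch assumes a change can be detected by comparing some fingerprint of the playlist (for example a hash or a modification timestamp).

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional

# Assumption: "at least once an hour" is read as a maximum playlist age.
MAX_PLAYLIST_AGE = timedelta(hours=1)


@dataclass
class PlaylistSyncState:
    """What the DR side remembers about its last playlist download (hypothetical)."""
    fingerprint: Optional[str] = None   # e.g. a hash of the downloaded playlist
    synced_at: Optional[datetime] = None


def needs_download(state: PlaylistSyncState, remote_fingerprint: str,
                   now: datetime) -> bool:
    """Return True if the current-day playlist should be downloaded again."""
    if state.synced_at is None:
        return True                      # nothing downloaded yet
    if remote_fingerprint != state.fingerprint:
        return True                      # the playlist changed on the main system
    # Unchanged playlist: refresh anyway once it is an hour old.
    return now - state.synced_at >= MAX_PLAYLIST_AGE


def record_download(state: PlaylistSyncState, remote_fingerprint: str,
                    now: datetime) -> None:
    """Remember the playlist version that was just downloaded."""
    state.fingerprint = remote_fingerprint
    state.synced_at = now
```

A scheduler would call `needs_download` periodically and, when it returns `True`, fetch the playlist and call `record_download`. The hourly refresh acts as a safety net in case a change notification is missed.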

This disaster recovery playout has full channel branding, with graphics, dynamic text insertion, squeeze-backs and other DVEs. The system also reads and inserts SCTE-35 cues, both automatically and manually. It generates as-run logs compatible with the customer's scheduling and programme reconciliation systems, as well as reports in a format compatible with the customer's sales and advertising software. All operator activity while logged in is recorded continuously, archived and made accessible on request to authorised administrators. In addition, for incident diagnosis and maintenance purposes, all technical information about the operation of the different applications is recorded, time-stamped and archived so that it is available if needed.

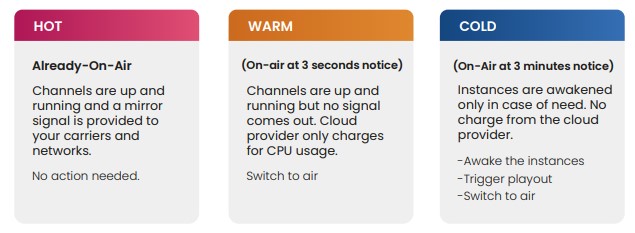

The system has three possible activity states, which correspond to the three cost levels in the contract with AWS.

- HOT: the system is fully operational, delivering to the carriers a signal identical to the one supplied by the main third-party system (a mirror).

- WARM: the system is playing out, but the signal does not leave the instances. In this state the system can deliver a signal one second after it is requested, by activating the switching point at the output.

- COLD: this state is extremely cheap, since no AWS resources are used and so no charge is made.

Going from COLD to HOT takes between three and five minutes, depending on how many channels are involved. Operators can decide at any time which state they want for the complete system or for each group of four channels. In normal circumstances the system can be operated from the on-premise master control room as if it were being played out from the on-premise rack room. In an emergency it can be invoked from anywhere by anyone who has the credentials.
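
The three states and their activation delays can be summarised in a small Python model. It is only a sketch built from the figures quoted above (one second from WARM, up to five minutes from COLD). The names `DrState`, `delivers_signal`, `uses_cloud_resources` and `worst_case_seconds_to_air` are hypothetical, and treating the slowest group of channels as the limit for the whole system is an assumption.

```python
from enum import Enum
from typing import Dict


class DrState(Enum):
    COLD = "cold"   # no AWS resources in use
    WARM = "warm"   # playing out, signal kept inside the instances
    HOT = "hot"     # playing out and delivering to the carriers


# Worst-case time to reach HOT, in seconds, using the figures from the text:
# one second from WARM, up to five minutes from COLD.
WORST_CASE_SECONDS_TO_HOT = {
    DrState.HOT: 0,
    DrState.WARM: 1,
    DrState.COLD: 5 * 60,
}


def delivers_signal(state: DrState) -> bool:
    """Only HOT sends a signal out to the carriers."""
    return state is DrState.HOT


def uses_cloud_resources(state: DrState) -> bool:
    """HOT and WARM keep the playout running; COLD uses nothing."""
    return state is not DrState.COLD


def worst_case_seconds_to_air(group_states: Dict[str, DrState]) -> int:
    """Worst-case delay until every channel group is on air.

    Assumption: groups are brought up in parallel, so the slowest
    group determines the delay for the whole system.
    """
    return max(
        (WORST_CASE_SECONDS_TO_HOT[s] for s in group_states.values()),
        default=0,
    )
```

For example, with one group HOT and another WARM the worst case is one second. As soon as any group is left COLD, the operator should plan for up to five minutes.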

A number of specially configured laptops are stored in different locations (some of them off campus). Simply by booting these laptops, operators gain control of the emission. If the problems in the main playout are expected to last, some studios in outlying locations are prepared to send their signal over SRT so that live programmes can be produced. Similarly, the U-van can connect by the same means so that sports events can be aired without interruption. All the contingency procedures have been carefully established, in particular those that tell the different carriers and networks (digital terrestrial, satellite uplinks, cable and CDNs) which confirmation protocol they must follow to switch from the links used by the regular playout to the incoming SRT signal from the disaster recovery in the cloud.

The playout engineer in charge of the project explained the criteria used to select the concept and the software proposed by Vector 3.

“We wanted a system that works on known technologies and runs in our own cloud, so that we can administer it ourselves with our own personnel. We did not want to use third-party hosting for cloud playout, just as we did not want to use hosting for our regular playout. We want to feel that we have our emission under our aegis and that we can manipulate it without interacting with third parties or learning abstruse technologies.”